ROSS Intelligence, the artificial intelligence legal research platform, outperforms Westlaw and LexisNexis in finding relevant authorities, in user satisfaction and confidence, and in research efficiency, and is virtually certain to deliver a positive return on investment.

These are among the findings of a benchmark report being released today by the technology research and advisory company Blue Hill Research, pitting ROSS against the two dominant legal research services. The full report will be available for download at the ROSS Intelligence website.

The study, which ROSS commissioned, assigned a panel of 16 experienced legal research professionals to research seven questions modeling real-world issues in federal bankruptcy law. Researchers were divided into four groups, with each group constrained to perform the research using a particular method:

- Boolean search, in which researchers used only Boolean keyword search capabilities of major legal research platforms.

- Natural language search, in which researchers used only the natural language search capabilities of major legal research platforms.

- ROSS and Boolean Search, in which researchers were directed to use the ROSS platform and Boolean keyword search capabilities of major legal research platforms as they saw fit.

- ROSS and natural language search, in which researchers were directed to use the ROSS platform and natural language search capabilities of major legal research platforms as they saw fit.

Of the Boolean and natural language search tools, researchers were assigned a mixture of Westlaw and LexisNexis tools. In addition, members of each research group were limited to individuals with no prior experience with the assigned tool and relatively minimal experience with bankruptcy law.

Research results were benchmarked against three assessment factors:

- Information retrieval quality, including thoroughness, accuracy and ranking effectiveness.

- User satisfaction, including ease of use and confidence in answer obtained.

- Research efficiency, based on time to obtain a satisfactory answer.

The study concluded that ROSS generated better search results across all three benchmarks. “These findings indicate clear advantages resulting from the addition of the ROSS tool to electronic legal research involving traditional tools,” the report concludes.

Information Retrieval Quality

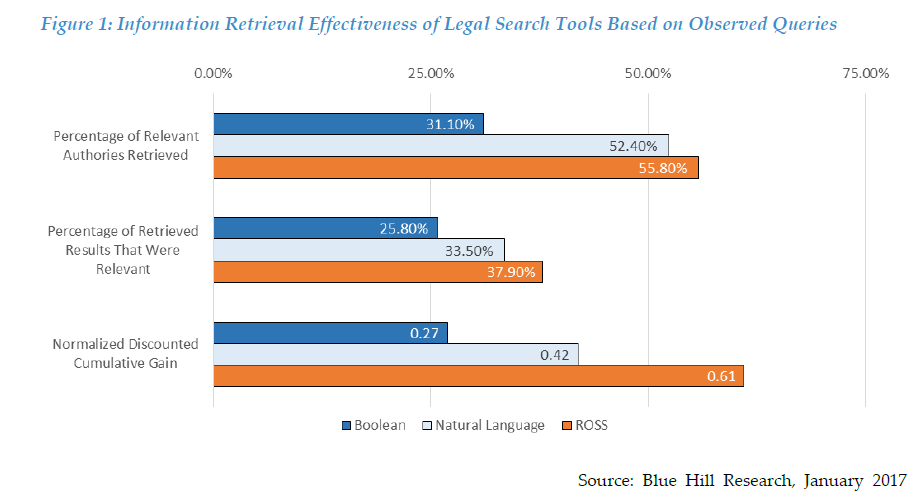

To benchmark the quality of information retrieval using the different research methods, Blue Hill compared their performance in three categories:

- Thoroughness, or the number of relevant authorities identified.

- Accuracy, or the proportion of retrieved results that represented relevant authorities.

- Normalized Discounted Cumulative Gain (NDCG), a standardized measure of the ranking of search results compared to an idealized ranking according to the relative value each result has to a user.
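For readers unfamiliar with these measures, here is a minimal Python sketch of how the three are commonly computed in information retrieval. It is an illustration under stated assumptions, not Blue Hill's actual methodology: the function names, the cutoff of 20 results, the log2 discount and the sample gain values are all my own choices, and the report may define the measures differently.

```python
import math

def recall_at_k(ranked, relevant, k=20):
    """Thoroughness: share of all relevant authorities found in the top k."""
    top = ranked[:k]
    return len(set(top) & relevant) / len(relevant) if relevant else 0.0

def precision_at_k(ranked, relevant, k=20):
    """Accuracy: share of the top-k results that are relevant authorities."""
    top = ranked[:k]
    return sum(1 for doc in top if doc in relevant) / len(top) if top else 0.0

def dcg(gains):
    """Discounted cumulative gain: results further down the list count less."""
    return sum(g / math.log2(i + 2) for i, g in enumerate(gains))

def ndcg_at_k(ranked, gain_by_doc, k=20):
    """DCG of the actual ranking divided by the DCG of an ideal ranking."""
    gains = [gain_by_doc.get(doc, 0.0) for doc in ranked[:k]]
    ideal = sorted(gain_by_doc.values(), reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0

# Hypothetical example: A, B and C are relevant authorities with made-up
# gain values; X and Y are irrelevant results.
gain_by_doc = {"A": 3.0, "B": 2.0, "C": 1.0}
relevant = set(gain_by_doc)
ranked = ["A", "X", "B", "Y"]

thoroughness = recall_at_k(ranked, relevant)
accuracy = precision_at_k(ranked, relevant)
ranking_score = ndcg_at_k(ranked, gain_by_doc)
```

In this toy ranking, two of the three relevant authorities are retrieved and half of the results are relevant. The NDCG score falls below 1 because an irrelevant result sits in the second position and one relevant authority is missing altogether.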

The chart at the top of this post shows the results. “On every measure, ROSS outperformed the traditional tools evaluated,” the report says.

Perhaps most striking about the findings was what they showed about Boolean search. Boolean tools retrieved less than a third of relevant authorities within their first 20 results, and only 25.8 percent of their results were relevant authorities.

By comparison, ROSS retrieved 55.8 percent of the relevant authorities within its top 20 results and 37.9 percent of results were relevant authorities.

ROSS most significantly outperformed Boolean and natural language tools in its ranking of results, the report found. Using the NDCG standard described above, ROSS achieved a score of .61, which was 46.1 percent higher than natural language and 127.8 percent higher than Boolean.

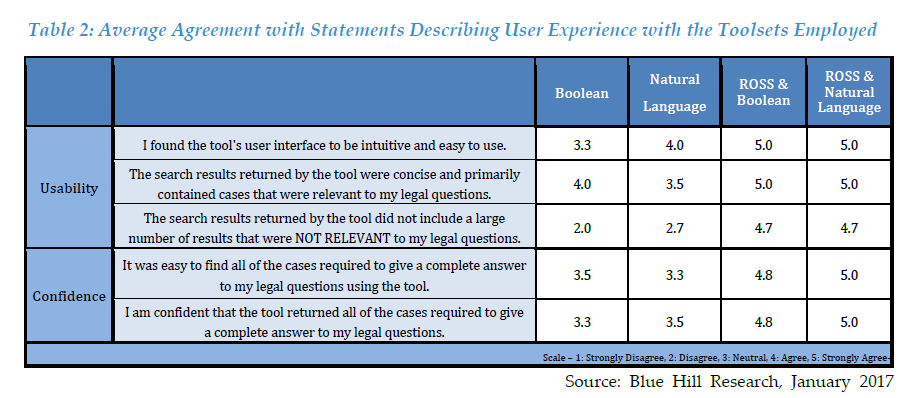

User Satisfaction and Confidence

When they completed their assignments, the researchers took a user satisfaction survey. This chart shows their responses.

The report summarizes it this way:

[T]he survey results summarized in Table 2 reveal strong indicators of user satisfaction of the participants using a ROSS-supported toolset with respect to the usability, presentation of search results, and inclusion of relevant authorities within the search results. Participants within these groups also indicated high levels of confidence in the ability of the tool to identify all authorities relevant to the matter. In nearly all cases, the responses indicated by participants using ROSS and another tool often exceeded those of organizations using only Boolean search or only Natural Language search by at least a full point.

The report further finds that there is a correlation between the effectiveness of the research tool and user perceptions. “Anecdotal responses … suggest that the ROSS tool’s higher concentration of relevant authorities among initial search result positions played a role with the higher satisfaction with the ease of use and confidence,” the report says.

Research Efficiency

In terms of research efficiency, the study found that ROSS users cut their research time by 30.3 percent compared with users of Boolean search and by 22.3 percent compared with users of natural language search.

The Boolean researchers completed their tasks in 52.3 minutes, on average. Natural language researchers were a bit faster, completing their tasks in 47.2 minutes. By comparison, the group using ROSS and Boolean completed their tasks in 36.5 minutes, on average, and the ROSS and natural language group finished in 36.7 minutes.
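As a quick arithmetic check, the reported percentage reductions can be roughly reproduced from these average times. The sketch below assumes each ROSS group is compared against the group using the same traditional method without ROSS; that pairing is my assumption, and the function and variable names are mine.

```python
# Average task times in minutes, as reported in the study.
mean_minutes = {
    "boolean": 52.3,
    "natural_language": 47.2,
    "ross_boolean": 36.5,
    "ross_natural_language": 36.7,
}

def percent_reduction(baseline, improved):
    """Percentage by which `improved` is shorter than `baseline`."""
    return (baseline - improved) / baseline * 100

# Assumed pairing: each ROSS group versus its non-ROSS counterpart.
boolean_cut = percent_reduction(
    mean_minutes["boolean"], mean_minutes["ross_boolean"]
)
natural_language_cut = percent_reduction(
    mean_minutes["natural_language"], mean_minutes["ross_natural_language"]
)

print(f"vs. Boolean: {boolean_cut:.1f}% less time")
print(f"vs. natural language: {natural_language_cut:.1f}% less time")
```

Both results land within about a tenth of a percentage point of the 30.3 and 22.3 percent figures in the report. The small gap presumably reflects rounding in the published averages.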

The study also looked at the time it took researchers to write their answers to the research questions. Here, it found no correlation between the research tools used and the time to write the answer.

Analyzing the Business Case

Based on these findings, Blue Hill went on to quantify how the research efficiency it found using ROSS would impact the bottom line for law firms and legal organizations. To do this, it compared the net gain that ROSS provides against the cost of acquiring it.

Blue Hill’s analysis looked at how the reduction in research time from using ROSS could be converted to productive, revenue-generating work. It estimated that for an associate billing $320 an hour, the conversion could generate revenue ranging from $5,306 if 10 percent of the saved time is converted to $61,868 if 100 percent of the saved time is converted.

That translates to a return on investment of anywhere from a low of 10.5 percent to a high of nearly 2,500 percent, again depending on the extent of the conversion. From the report:

Notably, using Blue Hill’s estimated billable rate of $320, a positive ROI is obtained at a 10% conversion rate, meaning that the investment drives a net gain to the firm with a minimal recovery of written-off hours. Similarly, a 176.4% to 544.5% ROI becomes possible with at least 25% conversion. Where exactly an organization will fall within these ranges will depend on a variety of factors related to its business, investment costs, and the scope of use adopted. In all cases, however, Blue Hill’s model strongly suggests positive business gain is available from the investment in ROSS.
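To make the ROI arithmetic concrete, here is a small sketch using the conventional definition of ROI as net gain divided by cost. I am assuming that is the definition Blue Hill used. The implied cost it produces is back-solved from the two low-end figures quoted above ($5,306 in revenue and a 10.5 percent ROI); it is not a number stated in the report, and the function names are mine.

```python
def roi(gain, cost):
    """Conventional return on investment: net gain as a fraction of cost."""
    return (gain - cost) / cost

def implied_cost(gain, roi_value):
    """Back-solve the cost that yields `roi_value` for a given gain."""
    return gain / (1 + roi_value)

# Low-end scenario from the post: $5,306 in revenue at a 10.5 percent ROI.
# The resulting cost is an inference under the assumed ROI definition,
# not a figure published by Blue Hill.
low_end_cost = implied_cost(5306, 0.105)

print(f"Implied investment cost: ${low_end_cost:,.2f}")
print(f"ROI check: {roi(5306, low_end_cost):.1%}")
```

Under this definition, ROI turns positive as soon as the converted revenue exceeds the cost of the tool, which is why even a 10 percent conversion rate can clear the bar.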

Takeaways from Blue Hill and Me

The benchmarking report provides this conclusion:

Blue Hill finds that the ROSS tool provides significant, additive contributions to the effectiveness of legal researchers. … These results have the potential to unlock new gains in the efficient and profitable operation of legal organizations, as well as create opportunities for new revenue gain.

It also provides this caveat:

It should be noted that none of these findings indicate that AI-assisted legal research constitutes a dramatic transformation in the use of technology by legal organizations. Rather, the use cases and impact reviewed indicate that tools like ROSS Intelligence more closely represent a significant iteration in the continuing evolution of legal research tools that began with the launch of digital databases of authorities and have continued through developments in search technologies.

I would add some caveats of my own about this study:

- First, as noted at the outset, ROSS commissioned it. However, Blue Hill is a well-regarded independent research firm and the fact that ROSS commissioned it should not detract from its findings.

- Second, it is important to emphasize that the researchers did not use ROSS alone. They used it in conjunction with other tools to assist the overall effectiveness of their research. For the researchers using ROSS and either Boolean or natural language search, we do not know how they balanced the two tools.

- Third, the research was limited to bankruptcy law, which is ROSS’s home turf, so to speak. Bankruptcy was the first area of law for which ROSS was designed to be used.

- Fourth, this is not a “robots replacing lawyers” study. ROSS was used here as a tool to assist lawyers in research, not to perform research in their place.

All of that said, this report is significant. Even as AI tools continue to make inroads in various segments of the legal profession, many still question their real effectiveness and value, and there has been little evidence to answer those doubts.

No doubt there will be other studies and other benchmarking reports of AI in the legal profession. But this study provides tangible evidence that AI not only works but, when used in conjunction with established research tools, can deliver measurably better results and measurable ROI.

Robert Ambrogi Blog
