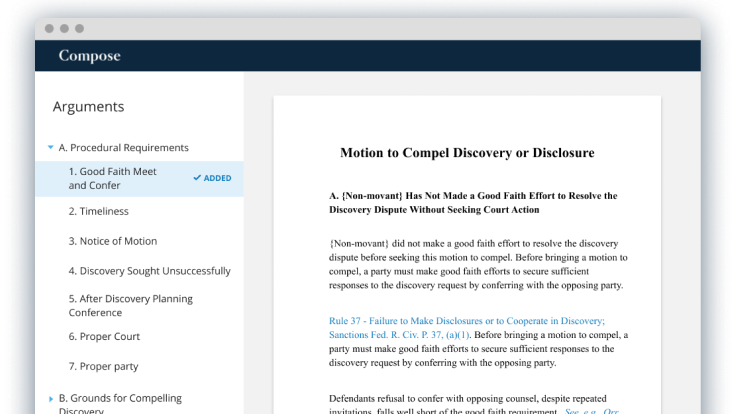

In what Casetext cofounder and CEO Jake Heller calls a breakthrough that will have a profound impact on the practice of law, the legal research company is today launching Compose, a first-of-its-kind product that helps you create the first draft of a litigation brief in a fraction of the time it would normally…

Robot Wars Round 2: Faux Face Off of the AI-Driven Brief-Analysis Tools

In this corner: CARA, the original AI-powered brief-analysis tool introduced in 2016 by the legal research company Casetext.

In the other corner: Well, no one really, except empty seats behind name cards bearing the names of other brief-analysis products and an audience of curious onlookers.

But its competitors’ no-shows did not stop Casetext from…

Casetext Adds Public Records Search through Partnership with Tracers

Subscribers to the legal research company Casetext can now get access to public and business records at a reduced cost, as the result of a new partnership between the company and Tracers, a provider of public records data to law firms, software integrators, technology partners and others.

Tracers has long been providing public…

Price Wars in Legal Research Mean Deals for Small Firms; I Compare Costs

LexisNexis has quietly introduced transparent, flat-rate pricing for one- and two-lawyer law firms, with plans starting at $75 a month. This is good news for solo and small firms, and reflects the increasing array of legal research options they can choose from. But exactly how do those options stack up?

The long-established legal research…

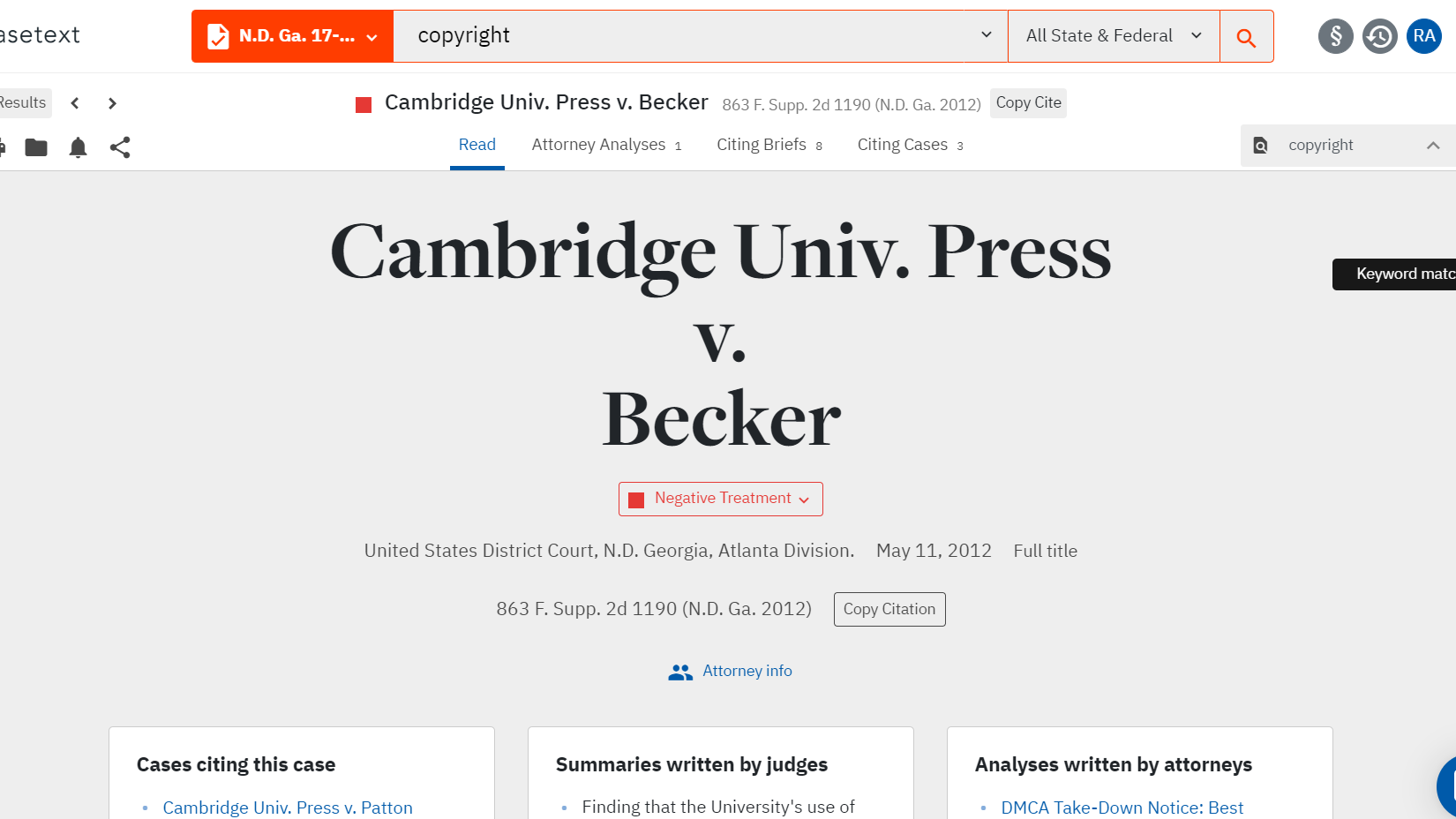

Casetext’s New ‘SmartCite’ Citator Is Its Clever Answer to Shepard’s and KeyCite

Knowing whether a case is good law is elemental to legal research. To do this, lawyers have long relied on citator services such as Shepard’s from LexisNexis and KeyCite from Westlaw. Now, the legal research service Casetext has introduced a citator of its own, called SmartCite, with many of the features you would…

Mike Whelan of Lawyer Forward Moves to Casetext as Managing Editor

Mike Whelan Jr., founder of the Lawyer Forward conference and an attorney who practiced law in Rockport, Texas, has joined the legal research company Casetext as managing editor, where he will be responsible for shaping and executing the company’s communications strategy, including podcasting, blogging and social outreach.

Study Says Casetext Beats LexisNexis for Research, But LexisNexis Calls Foul

A study released this week pitted two legal research platforms against each other, Casetext CARA and Lexis Advance from LexisNexis, and concluded that attorneys using Casetext CARA finished their research significantly more quickly and found more relevant cases than those who used Lexis Advance.

The study, The Real Impact of Using Artificial Intelligence…

Winners Announced of 2018 ‘Changing Lawyer’ Awards

In June, I wrote about a new legal industry award intended to honor innovation in legal practice and legal technology, The Changing Lawyer Awards, for which I was to be one of the judges. Now, the results are in.

The sponsor of the awards, Litera Microsystems and its online publication The Changing Lawyer, announced…

LawNext Episode 3: Casetext’s Founders on their Quest to Make Legal Research Affordable

Seeking To Expand Among Smaller Firms, Legal Research Service Casetext Adds Features, Lowers Price

When the legal research service Casetext was first launched in 2013, its founders wanted to democratize access to the law by providing free access to legal research enhanced through crowdsourced annotations. As the company grew and took on venture capital, it increasingly targeted its sales and marketing to the large-firm market and implemented…

World Economic Forum’s List of ‘Technology Pioneers’ Includes Just One from Legal

The World Economic Forum, a Geneva-based nonprofit focused on entrepreneurship in the global public interest, has recognized 61 early-stage companies as Technology Pioneers for their design, development and deployment of potentially world-changing innovations and technologies. Of the 61, only one is a legal technology company.

That one is Casetext, the legal research…

In Survey, Judges Say Lawyers’ Incomplete Research Impacts Case Outcomes

Most judges in a survey say that they see lawyers miss relevant precedent in their legal research and that those missing cases have impacted the outcome of a motion or proceeding.

The legal research company Casetext surveyed 66 federal and 43 state judges to learn whether missing precedent ever affects the outcome of a…

Robert Ambrogi Blog
